Singapore Leads Global Consensus for Safe Artificial Intelligence

May 8, 2025

Singapore spearheads international efforts to coordinate artificial intelligence research and reduce global risks, bridging US-China divides.

In a rare moment of international consensus, Singapore brought together leading artificial intelligence researchers from the United States, China, and Europe to develop a coordinated plan on AI safety.

The “Singapore Consensus on Global AI Safety Research Priorities” identifies three main areas for collaboration: assessing the risks posed by frontier models, developing safer ways to build those models, and creating methods to control the behavior of advanced artificial intelligence systems.

The meeting took place on April 26 during the International Conference on Learning Representations (ICLR) in Singapore and included experts from OpenAI, Anthropic, Google DeepMind, xAI, and Meta, alongside academics from MIT, Stanford, Tsinghua University, and the Chinese Academy of Sciences.

Specialists from the AI safety institutes of the US, UK, France, Canada, Japan, and South Korea also participated.

The document emphasizes a shared approach to risk mitigation. The rise of increasingly powerful models has sparked concerns ranging from bias and misuse to existential threats, should AI surpass human intelligence across multiple domains.

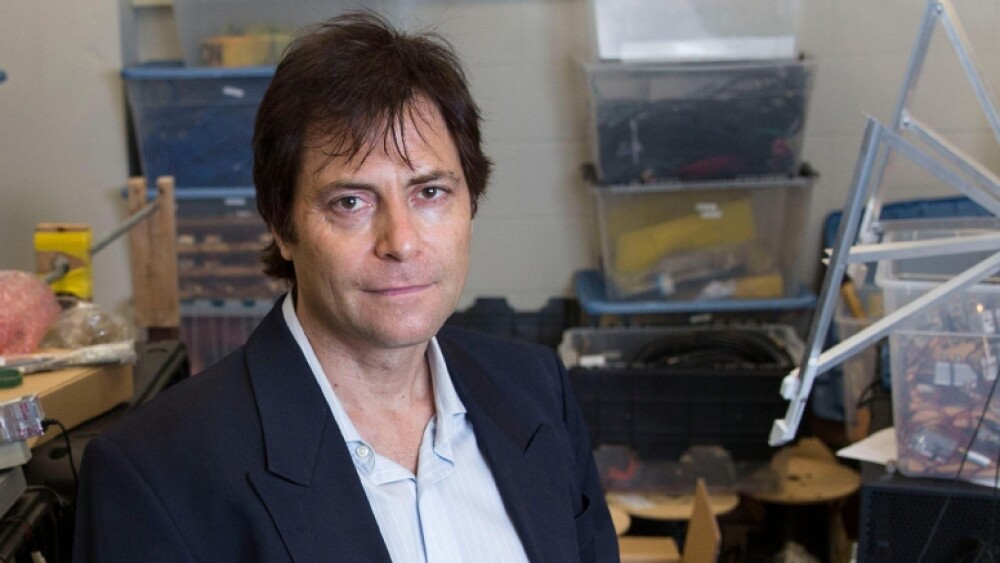

MIT scientist Max Tegmark noted, “Singapore is one of the few countries maintaining good relations with both East and West. They are not seeking to develop artificial general intelligence themselves but aim to ensure that those who do cooperate rather than compete aggressively.”

AI “catastrophists” warn that advanced systems could manipulate humans to achieve their own objectives, while others focus on more immediate issues such as security and fairness.

The technological rivalry between the US and China further shapes the debate. With Chinese startups launching advanced models, the US government has strengthened export controls and is considering additional restrictions to limit China’s advanced AI capabilities.

At the same time, current US policy favors aggressive AI development, downplaying risks in favor of economic and military competitiveness.

In this context, Singapore acts as a mediator. The meeting aimed to refocus the scientific community’s attention on safety, so that the technology race does not translate into global hazards.

Tegmark presented research challenging methods previously considered effective for controlling advanced models by means of simpler systems, showing that these strategies fail in critical scenarios. “We have tried to quantify this, and technically it doesn’t work at the level we need. Much is at stake,” he concluded.

The Singapore consensus represents an unprecedented effort to create an international framework guiding safe artificial intelligence development, fostering scientific collaboration, and laying the foundation for global policies that prevent conflicts and ensure responsible use of emerging technologies.